MP130 Maple Leaf and Debian

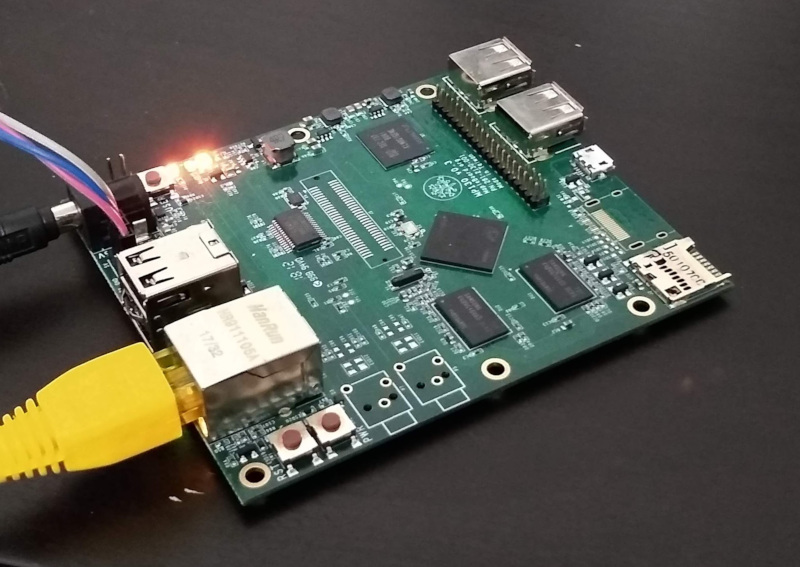

Like any conference one of the nice things about DebConf is the random interesting people you meet. At the DebConf18 conference dinner I had the pleasure of talking to Jia-Bin Huang, from Centrum Embedded Systems. He told me about how he was using Debian as the basis for his MapleBoard (mostly in Chinese, unfortunately) project, which is based on the Allwinner H3 and has thousands of shipped units. The next day I went to take a look at the website (Google Translate proved helpful), which has details of the board including full schematics of the board. I reached out to Jia-Bin to see if he was still at the conference and if he had any boards with him, but he’d already returned to Taipei. He generously offered to send me one and a few weeks later (mostly due to UK customs delays) I had an “Economy Edition” board in my hands.

Getting up and running was simple; the board came with a pl2303 based USB serial cable, but I found it a little unreliable so ended up using my own cable. The supplied documentation was in Chinese, but the login details were clearly visible - username mpl1, password 1234. After logging in I found it was running a lightly customized Debian Stretch variant, with the following packages not from the main Debian repository:

base-files 9.9+mp4 Maple base system miscellaneous files

debian-archive-keyring 2017.7+mp1 GnuPG archive keys of the Debian archive

distro-info-data 0.36+mp1 information about the distributions' releases (data files)

linux-image-4.15.2a… 4.15.2a… Linux kernel, version 4.15.2a-02769-g6d0ce60c8d56

maplewebde 0.1~rc4-2 Web interface to communicate with mapleboard

maplewebde seems to be a web interface for interacting with the board, but it’s in Chinese so I wasn’t able to make much sense of it. I was more interested in the kernel - how much customisation had been done, and how much was already mainlined. Happily the Linux sunxi folk have done a lot of great work in getting things upstream, so although the supplied kernel was using its own drivers (largely branded Mapleboard rather than Allwinner) all the necessary pieces were in mainline. I did a little bit of cleanup of the supplied device tree configuration file, and am pleased to say that as of 5.0-rc1 a multi_v7_defconfig will build a kernel and a sun8i-h3-mapleboard-mp130.dtb file which Just Work™ on the device.

What about the board itself? I haven’t thrown a lot at it, but it feels reasonably responsive compared to some other ARM boards I’ve played with. Quad 1GHz cores and 1GB RAM is a nice enough base spec, though it is ARMv7 so 32-bit only. Unfortunately the “Economy Edition” doesn’t have HDMI on board or I’d have seen about trying to use it as a media player - the video decoding engine apparently has Free drivers based on a vdpau backend, which is pretty cool. There’s no SATA, so it can’t be pressed into service as a build machine easily. I suspect in the long run I’ll press it into service as part of my home automation setup, somewhere I need more oompf than an ESP8266 but still want low power consumption - there are a number of GPIOs conveniently brought out to a 40 pin header.

In general terms of the target market my understanding is the board is largely pitched as a development board, with Centrum being prepared to do customisations for sufficiently sized runs. The open nature of the board, and availability of good support upstream (even if it’s come from the community rather than Allwinner) certainly makes it an attractive option if you’re looking for a 32-bit ARM platform, and although I’m not aware of any option other than ordering from Taiwan at present I’ve found Jia-Bin to be helpful with any queries I’ve had. Also I quite like supporting companies that are using Debian. :) Worth a look if you’re in the market for such a thing.

Maxcio W-UK007S Power Monitoring Smart Plug notes

The house server I built in 2013 is getting on a bit, so I’d like to replace it. That’s currently held up on availability of Ryzen 7 2700E CPUs, which look to be the best power consumption/performance trade-off available at present. While I wait for stock I figured I should see how the current i3-3220T is doing.

To do so I decided to buy a Smart Plug that advertised energy monitoring, planning to integrate it into my current setup for the monitoring and then being able to use it for general power control once the upgrade comparison is complete. I ended up with a pair of Maxcio Smart Plugs - pricing and availability worked out and I’d found confirmation that the W-US002S was ESP8266 based.

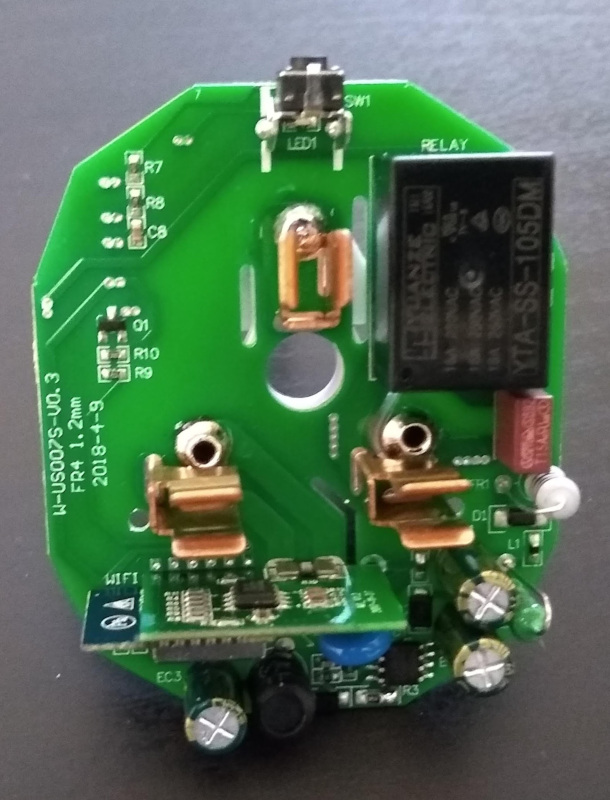

The model I ended up with is externally marked as a W-UK007S. It’s a fairly neat form factor (slightly smaller than the SonOff S26 I already have, which doesn’t do power monitoring). It also turned out to be easy to take apart; there is a circular cover in the middle which can be carefully popped out, revealing the single screw holding the device together.

The back plate has 4 clips holding it together at the corners and can be gently pried off. Inside there’s a main circuit board labelled “W-US0007S-V0.3” which has the relay on it and a perpendicular board with the ESP8266 module and power monitoring chip on it.

Sadly the layout didn’t match anything I was familiar with, or could find any details of. That meant I had to do some detective work to figure out how to talk to the ESP8266. It was easy enough to work out GND + VCC by following PCB tracks. Likewise the relay, the button and the LED (underneath the button, and separately controlled from the relay, unlike the S26). Next move was to hook up power (just a low voltage supply to GND/VCC, I did not engage in any experimentation involving mains voltages!) and monitor each unknown pin in turn in the hope I’d find TX (even if the supplied firmware didn’t print anything out the ESP8266 prints a message on boot, so I’d definitely see something if it was there).

Thankfully TX was brought out to the module connection to the main PCB, so I had something I could monitor.

Maxcio boot log

ets Jan 8 2013,rst cause:1, boot mode:(3,7)

load 0x40100000, len 1396, room 16

tail 4

chksum 0x89

load 0x3ffe8000, len 776, room 4

tail 4

chksum 0xe8

load 0x3ffe8308, len 540, room 4

tail 8

chksum 0xc0

csum 0xc0

2nd boot version : 1.4(b1)

SPI Speed : 40MHz

SPI Mode : QIO

SPI Flash Size & Map: 8Mbit(512KB+512KB)

jump to run user1 @ 1000

OS SDK ver: 1.4.2(23fbe10) compiled @ Sep 22 2016 13:09:03

phy v[notice]user_main.c:268 SDK version:1.4.2(23fbe10)

[notice]user_main.c:270 tuya sdk version:1.0.6

[notice]user_main.c:275 tuya sdk compiled at Jul 26 2017 15:27:36

[notice]user_main.c:277 BV:5.20 PV:2.1 LPV:3.1

reset reason: 0

epc1=0x00000000, epc2=0x00000000, epc3=0x00000000, excvaddr=0x00000000,depc=0x00000000

mode : softAP(de:4f:22:2c:76:93)

dhcp server start:(ip:192.168.4.1,mask:255.255.255.0,gw:192.168.4.1)

add if1

bcn 100

bcn 0

del if1

usl

mode : sta(dc:4f:22:2c:76:93)

add if0

scandone

[notice]device.c:1694 fireware info name:esp_hys_qx_single_light_jlplug version:1.0.0

[notice]device.c:1695 PID=gDYvLrWhRoqpgHMj

[notice]device.c:1696 FW Compiled date:Feb 5 2018

[notice]gw_intf.c:240 Authorization success

[notice]device.c:1722 ## AUTH DONE ##

[err]bl0937.c:1013 ### get_coefficient get err: 28

[err]device.c:1767 get ele data err...

[err]device.c:1772 get tem ele data err...

del if0

usl

mode : null

force slp enable,type: 2

fpm open,type:2 0

[notice]device.c:2041 # STAT_STA_UNCONN STAT_LOW_POWER#

[notice]device.c:2042 # STAT_STA_CONN #

[notice]device.c:648 #####I_VAL:0#####

[notice]device.c:654 ***************************Check Start 1**********************************

[notice]device.c:655 ### cur:0 mA power:0 W vol:0 V

[notice]device.c:656 ****************************Check Stop**********************************

Next I took the remaining unknown pins and tried grounding them on boot, in an attempt to find GPIO0 (which needs to be grounded to access the ROM serial bootloader). I ended up finding GPIO2 first, and then eventually figuring out the LED was using GPIO0 - learning the lesson not to assume pins don’t have multiple uses. Now I had TX + GPIO0 I could hold GPIO0 on boot and look for RX by probing the remaining pins and seeing if esptool could talk to the bootloader. Again, I was successful.

At that point I was able to download the firmware from flash, and poke it in the hope of working out the GPIO assignments (I’m a software guy, I’m happier with an assembly dump than probing randomly around a board in the hope of enlightenment). I generated a crude .elf from the flash dump using esp-bin2elf, hacking it up to cope better with an OTA ROM image and skip the boot loader. I initially used objdump to examine the result, which wasn’t that helpful, and then found ScratchABit, which made things much easier. What would have been ideal is some way to load in the .a static libraries from the SDK and automatically map those to the code; as well as providing some useful symbols it would have avoided work looking at functions that were irrelevant. The ESP8266 seems to want various levels of indirection to access functions and memory locations so it’s not just a simple matter of looking for an I/O request to a specific location, but I was able to figure out that the button was on GPIO13 and the relay on GPIO15.

All that left was the bit I was actually interested in - the power monitoring. The appropriate chip (clearly attached to a low resistance bridge from one of the AC power pins, and also attached to the other pin) on the PCB was marked “HJL-01 / J1749CYH / D797480E”. Whatever you find on the web this is not the same as the HLW8012. It’s very similar in operation but is actually a BL0937. Electrodragon’s Energy Meter page was the best resource I found - it has a link to the Chinese datasheet for the BL0937, which in combination with Google Translate allows the basic principles to be understood. Both devices work by having a pin (CF) which outputs pulses based on monitored power consumption, and a pin (CF1) which can be switched between monitoring current and voltage via a third pin (SEL). For the BL0937 you can just count pulses; the pulse width is fixed at 38µS and it’s just the frequency which varies. I’d found the GPIO interupt handler in my flash disassembly which indicated that GPIO5 was connected to CF and GPIO14 to CF1. Additionally the handler around GPIO14 needs to check which mode the chip is currently in, which let me discover GPIO12 was connected to this pin.

That resulted in the following pin mapping of the daughter PCB; the remaining 4 pins weren’t of interest once I had the ones I needed, so I didn’t do further investigation:

PCB EDGE

+----+

LED / GPIO0 |1 12| 3.3V

|2 11|

BUTTON / GPIO13 |3 10| GPIO2

TX |4 9| RX

RELAY / GPIO15 |5 8|

GND |6 7|

+----+

PCB CENTER

Of course the frequency values that come out of the BL0937 are not directly usable; there’s a certain amount of conversion/calibration that needs to be done. Thankfully although the datasheet has an equation that includes an odd constant value as well as the internal reference voltage this all boils down to a simple scaling value. I ended up using a multimeter to calibrate the voltage and then a standalone power meter + table lamp to calibrate power/current. Better results could be obtained with a constant voltage source and a known resistance load but this worked out close enough for my needs.

I wrote my own driver to fit within the ESP8266 MQTT framework I use, but if you’re looking for something more off the shelf ESPurna is probably a good start. Ticket #737 talks about adding support for the BL0937 (it’s close enough to the HLW8012 that you can get away with just changing the scaling factors), and the Homecube SP1 seems to use the same GPIOs for the various pieces of hardware.

I’ve put all the images from my teardown into a W-UK007S Teardown Album on Google Photos, in case it’s useful to anyone.

⬛⬛⬛⬛⬛⬛⬛⬛⬛⬛

We obsess over free speech. It is a club with which we beat anyone who we believe is trying to silence us, or otherwise make our opinion unheard. I live in Northern Ireland, so my legal right flows from the European Convention on Human Rights (ECHR) via a combination of the Human Rights Act 1998 (HRA) and the Northern Ireland Act 1998 s6(2) (NIA). Additionally the Charter of Fundamental Rights of the European Union applies in certain circumstances.

If you look at the ECHR the phrases “Free Speech” and “Freedom of Speech” do not actually appear. The relevant article is article 10, “Freedom of Expression”, which says:

Everyone has the right to freedom of expression. This right shall include freedom to hold opinions and to receive and impart information and ideas without interference by public authority and regardless of frontiers. This Article shall not prevent States from requiring the licensing of broadcasting, television or cinema enterprises.

The exercise of these freedoms, since it carries with it duties and responsibilities, may be subject to such formalities, conditions, restrictions or penalties as are prescribed by law and are necessary in a democratic society, in the interests of national security, territorial integrity or public safety, for the prevention of disorder or crime, for the protection of health or morals, for the protection of the reputation or rights of others, for preventing the disclosure of information received in confidence, or for maintaining the authority and impartiality of the judiciary.

Paragraph 1 is the one we trot out. Paragraph 2 doesn’t get so much airtime. The right to freedom of expression is not unqualified (compare Article 3 which prohibits torture and is short and succinct). There are recognised needs to curtail the freedom of expression. Even if you ignore state decisions such as “national security” or “protection of morals” it is still necessary to respect the right of others. The common tension is between the right to Freedom of Expression and the Right to respect for private and family life (Article 8), but prevention of disclosure of information received in confidence is also explicitly called out.

These qualifications on the right to freedom of expression shouldn’t seem surprising. Society is all about balancing the needs of everyone to try and achieve some sort of harmony. We can be generally in favour of a free press while also accepting that there need to be some limits on how much they can intrude into people’s personal lives. Equally just because one finds doxxing despicable doesn’t mean one is against freedom of expression. We need to stop the black and white view that any attempt to curtail freedom of expression, no matter what the context, is a violation of an unqualified fundamental right. Too much simplification of the details takes place.

The other thing that needs to be remembered is that this right relates to governments - “without interference by public authority”. It doesn’t say anything about what you as a private individual have to do to enable my right to freedom of expression. I can’t force you to read this blog, or provide me with aggregation on your site to help me reach a wider audience. Equally I don’t have to host comments that are spam, or that I find offensive. No one’s right to freedom of expression is impinged here; I can blog what I like if I’m the one hosting it, people can conduct their marketing in other forum without me being able to prevent them doing so.

We also don’t see any statement about what organisations have to do. The HRA talks explicitly about being able to review the actions of public authorities, but it is rightly silent on anything to do with private organisations. If you can’t be seen as acting as an arm of the government, the HRA doesn’t apply. Organisations don’t have to support the views of those they disagree with - just look at the split in newspapers over whether Brexit is a good thing or not - or things unrelated to the organisation (there’s no reason I would be syndicated on Planet GNOME). That might be disappointing to individuals, but trotting out Freedom of Expression as a defence or a justification for why an organisation should help promote our views just fails to understand the nature of the right.

I don’t make any points above that haven’t been made more eloquently by others countless times, but sometimes I feel we need to be reminded of them.

Enabling Wake-on-Lan with the N34 Mini PC

There is a room at the top of my house which was originally earmarked for storage (the loft is full of insulation rather than being a useful option). Then I remembered I still had my pico projector and it ended up as a cinema room as well. The pico projector needs really low light conditions with a long throw, so the fact the room only has a single small window is a plus.

I bought an “N34” mini PC to act as a media player - I already had a spare DVB-T2 stick to Freeview enable things, and the Kodi box downstairs has all my DVDs stored on it for easy streaming. It’s a Celeron N3450 based box with 4G RAM and a 32GB internal eMMC (though I’m currently running off an SD card because that’s what I initially used to set it up and I haven’t bothered to copy it onto the internal device yet). My device came from Amazon and is branded “Kodlix” (whose website no longer works) but it appears to be the same thing as the Beelink AP34.

Getting Linux onto it turned out to be a hassle. GRUB does not want to play with the EFI BIOS; it can be operated sometimes if manually called from the EFI Shell, but it does not work as the default EFI image to load. Various forum posts recommended the use of rEFInd, which mostly works fine.

Other than that Debian Stretch worked without problems. I had to pull in a backports kernel in order to make the DVB-T2 stick work properly, but the hardware on the N34 itself was all supported out of the box.

The other issue was trying to get Wake-on-Lan to work. The room isn’t used day to day so I want to be able to tie various pieces together with home automation such that I can have everything off by default and a scene configured to set things up ready for use. The BIOS has an entry for Wake-on-Lan, ethtool reported Supports Wake-on: g which should mean MagicPacket wakeup was enabled, but no joy. Looking at /proc/acpi/wakeup gave:

/proc/acpi/wakeup contents

Device S-state Status Sysfs node

HDAS S3 *disabled pci:0000:00:0e.0

XHC S3 *enabled pci:0000:00:15.0

XDCI S4 *disabled

BRCM S0 *disabled

RP01 S4 *disabled

PXSX S4 *disabled

RP02 S4 *disabled

PXSX S4 *disabled

RP03 S4 *disabled pci:0000:00:13.0

PXSX S4 *disabled pci:0000:01:00.0

RP04 S4 *disabled

PXSX S4 *disabled

RP05 S4 *disabled

PXSX S4 *disabled

RP06 S4 *disabled pci:0000:00:13.3

PXSX S4 *disabled pci:0000:02:00.0

PWRK S4 *enabled platform:PNP0C0C:00

pci:0000:01:00.0 is the network card:

01:00.0 Ethernet controller [0200]: Realtek […] Ethernet Controller [10ec:8168] (rev 0c)

I need this configured to allow wakeups which apparently is done via sysfs these days:

echo enabled > /sys/bus/pci/devices/0000\:01\:00.0/power/wakeup

This has to be done every boot so I just tied it into /etc/network/interfaces.

All of this then enables Home Assistant to control the Kodi box:

Home Assistant Kodi WoL configuration

wake_on_lan:

media_player:

- platform: kodi

name: Kodi (Cinema)

host: kodi-cinema.here

port: 8000

username: kodi

password: !secret kodi_cinema_pass

enable_websocket: false

turn_on_action:

service: wake_on_lan.send_magic_packet

data:

mac: 84:39:be:11:22:33

broadcast_address: 192.168.0.2

turn_off_action:

service: media_player.kodi_call_method

data:

entity_id: media_player.kodi_cinema

method: System.Shutdown

My Home Assistant container sits on a different subnet to the media box, and I found that the N34 wouldn’t respond to a Wake-on-Lan packet to the broadcast MAC address. So I’ve configured the broadcast_address for Home Assistant to be the actual IP of the media box, allowed UDP port 9 (discard) through on the firewall and statically nailed the ARP address of the media box on the router, so it transmits the packet with the correct destination MAC:

ip neigh change 192.168.0.2 lladdr 84:39:be:11:22:33 nud permanent dev eth0

I’ve still got some other bits to glue together (like putting the pico projector on a SonOff), but this gets me started on that process.

(And yes, the room is a bit cosier these days than when that photograph was taken.)

MQTT enabling my doorbell

One of the things about my home automation journey is that I don’t always start out with a firm justification for tying something into my setup. There’s not really any additional gain at present from my living room lights being remotely controllable. When it came to tying the doorbell into my setup I had a clear purpose in mind: I often can’t hear it from my study.

The existing device was a Byron BY101. This consists of a 433MHz bell-push and a corresponding receiver that plugs into a normal mains socket for power. I tried moving the receiver to a more central location, but then had issues with it not reliably activating when the button was pushed. I could have attempted the inverse of Colin’s approach and tried to tie in a wired setup to the wireless receiver, but that would have been too simple.

I first attempted to watch for the doorbell via a basic 433MHz receiver. It seems to use a simple 16 bit identifier followed by 3 bits indicating which tone to use (only 4 are supported by mine; I don’t know if other models support more). The on/off timings are roughly 1040ms/540ms vs 450ms/950ms. I found I could reliably trigger the doorbell using these details, but I’ve not had a lot of luck with reliable 433MHz reception on microcontrollers; generally I use PulseView in conjunction with a basic Cypress FX2 logic analyser to capture from a 433MHz receiver and work out timings. Plus I needed a receiver that could be placed close enough to the bell-push to reliably pick it up.

Of course I already had a receiver that could decode the appropriate codes - the doorbell! Taking it apart revealed a PSU board and separate receiver/bell board. The receiver uses a PT4318-S with a potted chip I assume is the microcontroller. There was an HT24LC02 I2C EEPROM on the bottom of the receiver board; monitoring it with my BusPirate indicated that the 16 bit ID code was stored in address 0x20. Sadly it looked like the EEPROM was only used for data storage; only a handful of values were read on power on.

Additionally there were various test points on the board; probing while pressing the bell-push led to the discovery of a test pad that went to 1.8v when a signal was detected. Perfect. I employed an ESP82661 in the form of an ESP-07, sending out an MQTT message containing “ON” or “OFF” as appropriate when the state changed. I had a DS18B20 lying around so I added that for some temperature monitoring too; it reads a little higher due to being inside the case, but not significantly so.

All of this ended up placed in the bedroom, which conveniently had a socket almost directly above the bell-push. Tying it into Home Assistant was easy:

binary_sensor:

- platform: mqtt

name: Doorbell

state_topic: "doorbell/master-bedroom/button"

I then needed something to alert me when the doorbell was pushed. Long term perhaps I’ll add some sounders around the house hooked in via MQTT, and there’s a Kodi notifier available, but that’s only helpful when the TV is on. I ended up employing my Alexa via Notify Me:

notify:

- name: alexa

platform: rest

message_param_name: notification

resource: https://api.notifymyecho.com/v1/NotifyMe

data:

accessCode: !secret notifyme_key

and then an automation in automations.yaml:

- id: alexa_doorbell

alias: Notify Alexa when the doorbell is pushed

trigger:

- platform: state

entity_id: binary_sensor.doorbell

to: 'on'

action:

- service: notify.alexa

data_template:

message: "Doorbell rang at {{ states('sensor.time') }}"

How well does this work? Better than expected! A couple of days after installing everything we were having lunch when Alexa chimed; the door had been closed and music playing, so we hadn’t heard the doorbell. Turned out to be an unexpected delivery which we’d otherwise have missed. It also allows us to see when someone has rang the doorbell when we were in - useful for seeing missed deliveries etc.

(Full disclosure: When initially probing out the mains doorbell for active signals I did so while it was plugged into the mains. My ‘scope is not fully isolated it seems and at one point I managed to trip the breaker on the mains circuit and blow the ringer part of the doorbell. Ooops. I ended up ordering an identical replacement (avoiding the need to replace the bell-push) and subsequently was able to re-use the ‘broken’ device as the ESP8266 receiver - the receiving part was still working, just not making a noise. The new receiver ended up in the living room, so the doorbell still sounds normally.)

-

I have a basic ESP8266 MQTT framework I’ve been using for a bunch of devices based off Tuan PM’s work. I’ll put it up at some point. ↩

subscribe via RSS