Notes on upgrading from Jessie to Stretch

I upgraded my last major machine from Jessie to Stretch last week. That machine was the one running the most services, but I’d made notes while updating various others to ensure it went smoothly. Below are the things I noted along the way, both for my own reference and in case they are of use to anyone else.

- Roundcube with the sqlite3 backend stopped working after the upgrade; fix was to edit /etc/roundcube/debian-db-roundcube.php and change sqlite3:// to sqlite:// in the $config['db_dsnw'] line.

- Dovecot no longer supports SSLv2 so had to remove !SSLv2 from the ssl_protocols list in /etc/dovecot/conf.d/10-ssl.conf.

- Duplicity now tries to do a mkdir so I had to change from the scp:// backend to the sftp:// backend in my backup scripts.

- Needed to add needs_root_rights=yes to /etc/X11/Xwrapper.config so Kodi systemd unit could still start it on a new VT. Need to figure out how to get this working without the need for root.

- Upgrading fail2ban would have been easier if I’d dropped my additions in /etc/fail2ban/jail.d/ rather than the master config. Fixed for next time.

- ejabberd continues to be a pain; I do wonder if it’s worth running an XMPP server these days. I certainly don’t end up using it to talk to people myself.

- Upgrading 1200+ packages takes a long time, even when the majority of them don’t have any questions to ask during the process.

- PostgreSQL upgrades have got so much easier. pg_upgradecluster 9.4 main chugged away but did exactly what I needed.
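As a rough sketch of the Roundcube and Dovecot fixes from the list above, the two edits could be scripted along these lines. The helper names are made up for illustration, the exact contents of the lines being edited will vary between installs, and sed -i.bak assumes GNU sed (as shipped with Debian).

```shell
# Hypothetical helper: switch the Roundcube DSN scheme from sqlite3:// to
# sqlite:// on the db_dsnw line of the given file. A .bak copy is kept.
fix_roundcube_dsn() {
    sed -i.bak '/db_dsnw/ s|sqlite3://|sqlite://|' "$1"
}

# Hypothetical helper: drop the !SSLv2 token from the ssl_protocols line
# of the given file. A .bak copy is kept.
fix_dovecot_ssl() {
    sed -i.bak '/^ssl_protocols/ s/ *!SSLv2//' "$1"
}

# Usage on the real files (as root):
#   fix_roundcube_dsn /etc/roundcube/debian-db-roundcube.php
#   fix_dovecot_ssl /etc/dovecot/conf.d/10-ssl.conf
```

Taking the file as an argument makes it easy to try the edit on a copy first and diff it against the original before touching the live config.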

Other than those points things were pretty smooth. Nice work by all those involved!

How to make a keyring

Every month or two keyring-maint gets a comment about how a key update we say we’ve performed hasn’t actually made it to the active keyring, or a query about why the keyring is so out of date, or a report that although a key has been sent to the HKP interface, and that interface shows the update as received, it isn’t working when trying to upload to the Debian archive. It’s frustrating to have to deal with these queries, but the confusion is understandable. There are multiple public interfaces to the Debian keyrings and they’re not all equal. This post attempts to explain the interactions between them, and how I go about working with them as part of the keyring-maint team.

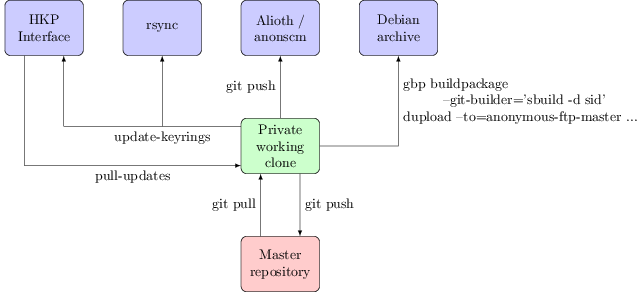

First, a diagram to show the different interfaces to the keyring and how they connect to each other:

Public interfaces

rsync: keyring.debian.org::keyrings

This is the most important public interface; it’s the one that the Debian infrastructure uses. It’s the canonical location of the active set of Debian keyrings and is what you should be using if you want the most up to date copy. The validity of the keyrings can be checked using the included sha512sums.txt file, which will be signed by whoever in keyring-maint did the last keyring update.
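A minimal sketch of fetching and checking a copy might look like the following. The function names are invented for illustration, and it assumes that sha512sums.txt sits at the top of the rsync module, that the paths it lists are relative to that directory, and that the signer's key is already in your GnuPG keyring.

```shell
# Fetch the whole rsync module into the given directory (needs network).
fetch_keyrings() {
    rsync -az keyring.debian.org::keyrings/ "$1"/
}

# Verify the clearsigned checksum file against keys you already trust.
check_signature() {
    gpg --verify "$1/sha512sums.txt"
}

# Recompute the SHA-512 of every file listed in sha512sums.txt.
# The PGP armour lines are not checksum lines, so sha512sum warns about
# them but still checks the files that are listed.
check_sums() {
    (cd "$1" && sha512sum --check --quiet sha512sums.txt)
}

# Usage:
#   fetch_keyrings ./keyrings && check_signature ./keyrings && check_sums ./keyrings
```

Both checks matter: the checksums only tell you the files match the list, while the signature tells you the list itself came from keyring-maint.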

HKP interface: hkp://keyring.debian.org/

What you talk to with gpg --keyserver keyring.debian.org. Serves out the current keyrings, and accepts updates to any key it already knows about (allowing, for example, expiry updates, new subkeys + uids or new signatures without the need to file a ticket in RT or otherwise explicitly request it). Updates sent to this interface will be available via it within a few hours, but must be manually folded into the active keyring. This in general happens about once a month when preparing for a general update of the keyring; see, for example, commit b490c1d5f075951e80b22641b2a133c725adaab8.

Why not do this automatically? Even though the site uses GnuPG to verify incoming updates, there have still been occasions where we’ve seen bugs (such as #787046, where GnuPG would always import subkeys it didn’t understand, even when that subkey was already present). Also we don’t want to allow just any UID to be part of the keyring. It is thus useful to retain a final set of human-based sanity checking for any update before it becomes part of the keyring proper.

Alioth/anonscm: https://anonscm.debian.org/git/keyring/keyring.git/

A public mirror of the git repository the keyring-maint team use to maintain the keyring. Every action is recorded here, and in general each commit should be a single action (such as adding a new key, doing a key replacement or moving a key between keyrings). Note that pulling in the updates sent via HKP counts as a single action, rather than having a commit per key updated. This mirror is updated whenever a new keyring is made active (i.e. made available via the rsync interface). Until that point pending changes are kept private; we sometimes deal with information, such as the fact someone has potentially had a key compromised, that we don’t want to be public until we’ve actually disabled the key. Every “keyring push” (as we refer to the process of making a new keyring active) is tagged with the date it was performed. Releases are also tagged with their codenames, to make it easy to do comparisons over time.

Debian archive

This is actually the least important public interface to the keyring, at least from the perspective of the keyring-maint team. No infrastructure makes use of it and while it’s mostly updated when a new keyring is made active we only make a concerted effort to do so when it is coming up to release. It’s provided as a convenience package rather than something which should be utilised for active verification of which keys are and aren’t currently part of the keyring.

Team interface

Master repository: kaufmann.debian.org:/srv/keyring.debian.org/master-keyring.git

The master git repository for keyring maintenance is stored on kaufmann.debian.org AKA keyring.debian.org. This system is centrally managed by DSA, with only DSA and keyring-maint having login rights to it. None of the actual maintenance work takes place here; it is a bare repo providing a central point for the members of keyring-maint to collaborate around.

Private interface

Private working clone

This is where all of the actual keyring work happens. I have a local clone of the repository from kaufmann on a personal machine. The key additions / changes I perform all happen here, and are then pushed to the master repository so that they’re visible to the rest of the team. When preparing to make a new keyring active the changes that have been sent to the HKP interface are copied from kaufmann via scp and folded in using the pull-updates script. The tree is assembled into keyrings with a simple make and some sanity tests performed using make test. If these are successful the sha512sums.txt file is signed using gpg --clearsign and the output copied over to kaufmann. update-keyrings is then called to update the active keyrings (both rsync + HKP). A git push public pushes the changes to the public repository on anonscm. Finally gbp buildpackage --git-builder='sbuild -d sid' tells git-buildpackage to use sbuild to build a package ready to be uploaded to the archive.
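The sequence above can be sketched as a small script. This is only an illustration of the order of the steps: the remote paths, the location and arguments of pull-updates, the location of sha512sums.txt, and running update-keyrings over ssh are all assumptions rather than the team's real layout. Setting DRY_RUN=1 prints each step instead of running it.

```shell
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

keyring_push() {
    # HYPOTHETICAL placeholders; the real paths are not given in the post.
    incoming='kaufmann.debian.org:/path/to/hkp-updates'
    active='kaufmann.debian.org:/path/to/incoming-sums/'

    # Fold in the updates received via HKP (invocation is an assumption).
    run scp "$incoming" ./hkp-updates &&
    run ./scripts/pull-updates ./hkp-updates &&
    # Assemble the keyrings and run the sanity tests.
    run make &&
    run make test &&
    # Sign the checksums and make the new keyring active.
    run gpg --clearsign sha512sums.txt &&
    run scp sha512sums.txt.asc "$active" &&
    run ssh kaufmann.debian.org update-keyrings &&
    # Publish the history and build the convenience package.
    run git push public &&
    run gbp buildpackage --git-builder='sbuild -d sid'
}

# Usage: DRY_RUN=1; keyring_push
```

Chaining the steps with && means a failed make test stops the push before anything is signed or published.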

Hopefully that helps explain the different stages and outputs of keyring maintenance; I’m aware that it would be a good idea for this to exist somewhere on keyring.debian.org as well and will look at doing so.

Learning to love Ansible

This post attempts to chart my journey towards getting usefully started with Ansible to manage my system configurations. It’s a high level discussion of how I went about doing so and what I got out of it, rather than including any actual config snippets - there are plenty of great resources out there that handle the actual practicalities of getting started much better than I could.

I’ve been convinced about the merits of configuration management for machines for a while now; I remember conversations about producing an appropriate set of recipes to reproduce our haphazard development environment reliably over 4 years ago. That never really got dealt with before I left, and as managing systems hasn’t been part of my day job since then I never got around to doing more than working my way through the Puppet Learning VM. I do, however, continue to run a number of different Linux machines - a few VMs, a hosted dedicated server and a few physical machines at home and my parents’. In particular I have a VM which handles my parents’ email, and I thought that was a good candidate for trying to properly manage. It’s backed up, but it would be nice to be able to redeploy that setup easily if I wanted to move provider, or do hosting for other domains in their own VMs.

I picked Ansible, largely because I wanted something lightweight and the agentless design appealed to me. All I really need to do is ensure Python is on the host I want to manage and everything else I can bootstrap using Ansible itself. Plus it meant I could use the version from Debian testing on my laptop and not require backports on the stable machines I wanted to manage.

My first attempt was to write a single Ansible YAML file which did all the appropriate things for the email VM; installed Exim/Apache/Roundcube, created users, made sure the appropriate SSH keys were in place, installed configuration files, etc, etc. This did the job, but I found myself thinking it was no better than writing a shell script to do the same things.

Things got a lot better when instead of concentrating on a single host I looked at what commonality was shared between hosts. I started with simple things; Debian is my default distro so I created an Ansible role debian-system which configured APT and ensured package updates were installed. Then I added a task to set up my own account and install my SSH keys. I was then able to deploy those 2 basic steps across a dozen different machine instances. At one point I got an ARM64 VM from Scaleway to play with, and it was great to be able to just add it to my Ansible hosts file and run the playbook against it to get my basic system setup.

Adding email configuration got trickier. In addition to my parents’ email VM I have my own email hosted elsewhere (along with a whole bunch of other users) and the needs of both systems are different. Sitting down and trying to manage both configurations sensibly forced me to do some rationalisation of the systems, pulling out the commonality and then templating the differences. Additionally I ended up using the lineinfile module to edit the Debian supplied configurations, rather than rolling out my own config files. This helped ensure more common components between systems. There were also a bunch of differences that had grown out of the fact each system was maintained by hand - I had about 4 copies of each Let’s Encrypt certificate rather than just putting one copy in /etc/ssl and pointing everything at that. They weren’t even in the same places on different systems. I unified these sorts of things as I came across them.

Throughout the process of this rationalisation I was able to easily test using containers. I wrote an Ansible role to create systemd-nspawn based containers, doing all of the LVM + debootstrap work required to produce a system which could then be managed by Ansible. I then pointed the same configuration as I was using for the email VM at this container, and could verify at each step along the way that the results were what I expected. It was still a little nerve-racking when I switched over the live email config to be managed by Ansible, but it went without a hitch as hoped.

I still have a lot more configuration to switch to being managed by Ansible, especially on the machines which handle a greater number of services, but it’s already proved extremely useful. To prepare for a jessie to stretch upgrade I fired up a stretch container and pointed the Ansible config at it. Most things just worked, and I was able to fix up the minor issues in that instance, leaving me confident that the live system could be upgraded smoothly. Similarly, when I want to roll out a new SSH key I can just add it to the Ansible setup and then kick off an update. No need to worry about whether I’ve updated it everywhere, or correctly removed the old one.

So I’m a convert; things were made a bit more difficult by starting with existing machines that I didn’t want too much disruption on, but going forward I’ll be using Ansible to roll out any new machines or services I need, and I expect new deployments to be much easier now I have a firm grasp on the tools available.

Just because you can, doesn't mean you should

There was a recent Cryptoparty Belfast event that was aimed at a wider audience than usual; rather than concentrating on how to protect oneself on the internet the 3 speakers concentrated more on why you might want to. As seems to be the way these days I was asked to say a few words about the intersection of technology and the law. I think people were most interested in all the gadgets on show at the end, but I hope they got something out of my talk. It was a very high level overview of some of the issues around the Investigatory Powers Act - if you’re familiar with it then I’m not adding anything new here, just trying to provide some detail about why it’s a bad thing from both a technological and a legal perspective.

Going to DebConf 17

Completely forgot to mention this earlier in the year, but delighted to say that in just under 4 weeks I’ll be attending DebConf 17 in Montréal. Looking forward to seeing a bunch of fine folk there!

Outbound:

2017-08-04 11:40 DUB -> 13:40 KEF WW853

2017-08-04 15:25 KEF -> 17:00 YUL WW251

Inbound:

2017-08-12 19:50 YUL -> 05:00 KEF WW252

2017-08-13 06:20 KEF -> 09:50 DUB WW852

(Image created using GIMP, fonts-dkg-handwriting and the DebConf17 Artwork.)
