Programming the FST-01 (gnuk) with a Bus Pirate + OpenOCD

Last year at DebConf14 Lucas authorized the purchase of a handful of gnuk devices, one of which I obtained. At the time it only supported 2048-bit RSA keys. I took a look at what might be involved in adding 4096-bit support during DebConf and managed to brick my device several times in doing so. Thankfully gniibe was on hand with his STLinkV2 to help me recover. However, I was subsequently loath to experiment further at home until I had a suitable programmer.

As it is, this year has been busy, and the 1.1.x release train is supposed to have 4K RSA (as well as ECC) support. DebConf15 is coming up and I felt I should finally sort out playing with the device properly. I still didn’t have a suitable programmer. Or did I? Could my trusty Bus Pirate help?

The FST-01 has an STM32F103TB on it. There is an exposed SWD port. I found a few projects that claimed to do SWD with a Bus Pirate - Will Donnelly has a much-cloned Python project, the MC HCK project have a programmer in Ruby and there’s LibSWD, though that’s targeted at smarter programmers. None of them worked for me; I could get the Python bits as far as correctly reading the ID of the device, but not reading the option bytes or successfully flashing (though I did manage an erase).

Enter the old favourite, OpenOCD. This already has SWD support and there’s an outstanding commit request to add Bus Pirate support. NodoNogard has a post on using the ST-Link/V2 with OpenOCD and the FST-01 which provided some useful pointers. I grabbed the patch from Gerrit, applied it to OpenOCD git and built an openocd.cfg that contained:

source [find interface/buspirate.cfg]

buspirate_port /dev/ttyUSB0

buspirate_vreg 1

buspirate_mode normal

transport select swd

source [find target/stm32f1x.cfg]

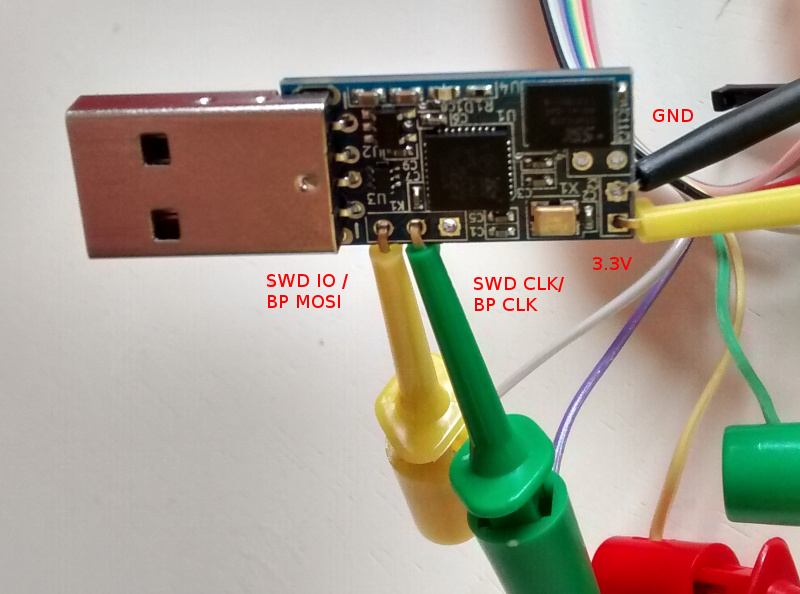

My BP has the Seeed Studio probe cable, so my hookups look like this:

That’s BP MOSI (grey) to SWD IO, BP CLK (purple) to SWD CLK, BP 3.3V (red) to FST-01 PWR and BP GND (brown) to FST-01 GND. Once that was done I fired up OpenOCD in one terminal and did the following in another:

$ telnet localhost 4444

Trying ::1...

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

Open On-Chip Debugger

> reset halt

target state: halted

target halted due to debug-request, current mode: Thread

xPSR: 0x01000000 pc: 0xfffffffe msp: 0xfffffffc

Info : device id = 0x20036410

Info : SWD IDCODE 0x1ba01477

Error: Failed to read memory at 0x1ffff7e2

Warn : STM32 flash size failed, probe inaccurate - assuming 128k flash

Info : flash size = 128kbytes

> stm32f1x unlock 0

Device Security Bit Set

stm32x unlocked.

INFO: a reset or power cycle is required for the new settings to take effect.

> reset halt

target state: halted

target halted due to debug-request, current mode: Thread

xPSR: 0x01000000 pc: 0xfffffffe msp: 0xfffffffc

> flash write_image erase /home/noodles/checkouts/gnuk/src/build/gnuk.elf

auto erase enabled

wrote 109568 bytes from file /home/noodles/checkouts/gnuk/src/build/gnuk.elf in 95.055603s (1.126 KiB/s)

> stm32f1x lock 0

stm32x locked

> reset halt

target state: halted

target halted due to debug-request, current mode: Thread

xPSR: 0x01000000 pc: 0x08000280 msp: 0x20005000
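The same sequence could probably be scripted instead of typed into telnet. What follows is only a sketch: it builds an `openocd` command line that replays the steps from the session above via `-c` arguments, assuming the `openocd.cfg` shown earlier is in the current directory and that the ELF path contains no spaces. The function names `openocd_flash_argv` and `flash_gnuk` are made up for illustration, and this has not been run against real hardware.

```python
import subprocess


def openocd_flash_argv(cfg, elf):
    """Build an openocd command line mirroring the interactive session.

    Assumption: `elf` contains no whitespace, since it is passed
    unquoted inside an OpenOCD (Tcl) command string.
    """
    steps = [
        "init",                            # connect to the target first
        "reset halt",
        "stm32f1x unlock 0",               # clear read protection
        "reset halt",                      # reset so the unlock takes effect
        f"flash write_image erase {elf}",
        "stm32f1x lock 0",                 # re-enable read protection
        "reset halt",
        "shutdown",                        # exit OpenOCD when done
    ]
    argv = ["openocd", "-f", cfg]
    for step in steps:
        argv += ["-c", step]
    return argv


def flash_gnuk(elf, cfg="openocd.cfg"):
    """Run the whole sequence; raises CalledProcessError on failure."""
    subprocess.run(openocd_flash_argv(cfg, elf), check=True)
```

Calling `flash_gnuk("src/build/gnuk.elf")` would then do the unlock, flash and lock in one go. Doing it by hand the first time is still worthwhile, as the output of each step is easier to inspect.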

Then it was a matter of disconnecting the gnuk from the BP, plugging it into my USB port and seeing it come up successfully:

usb 1-2: new full-speed USB device number 11 using xhci_hcd

usb 1-2: New USB device found, idVendor=234b, idProduct=0000

usb 1-2: New USB device strings: Mfr=1, Product=2, SerialNumber=3

usb 1-2: Product: Gnuk Token

usb 1-2: Manufacturer: Free Software Initiative of Japan

usb 1-2: SerialNumber: FSIJ-1.1.7-87063020

usb 1-2: ep 0x82 - rounding interval to 1024 microframes, ep desc says 2040 microframes
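To check which firmware version actually ended up on the token, the serial number string can be picked out of the kernel log. This is a small sketch that assumes the serial has the shape vendor-version-chip ID, as the `FSIJ-1.1.7-87063020` above suggests; that layout is an inference from this one example, and the helper names are hypothetical.

```python
import re

# Assumed layout: <vendor>-<dotted version>-<hex chip id>
SERIAL_RE = re.compile(
    r"^(?P<vendor>[A-Za-z]+)-(?P<version>\d+(?:\.\d+)*)-(?P<chip_id>[0-9A-Fa-f]+)$"
)


def serial_from_dmesg(text):
    """Return the USB serial number string from kernel log text, or None."""
    for line in text.splitlines():
        # "SerialNumber=3" (the string index) has no colon, so it is skipped.
        if "SerialNumber:" in line:
            return line.split("SerialNumber:", 1)[1].strip()
    return None


def parse_gnuk_serial(serial):
    """Split a serial into (vendor, version, chip_id), or None if it does not fit."""
    match = SERIAL_RE.match(serial.strip())
    if match is None:
        return None
    return match.group("vendor"), match.group("version"), match.group("chip_id")
```

Fed the log lines above, `parse_gnuk_serial(serial_from_dmesg(log))` gives `("FSIJ", "1.1.7", "87063020")`.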

More once I actually have a 4K key loaded on it.

Recovering a DGN3500 via JTAG

Back in 2010 when I needed an ADSL2 router in the US I bought a Netgear DGN3500. It did what I wanted out of the box and being based on a MIPS AR9 (ARX100) it seemed likely OpenWRT support might happen. Long story short, I managed to overwrite u-boot (the bootloader) while flashing a test image I’d built. I ended up buying a new router (same model) to get my internet connection back ASAP and never got around to fully fixing the broken one. Until yesterday. Below is how I fixed it, both for my own future reference and in case it’s of use to any other unfortunate soul.

The device has clear points for serial and JTAG and it was easy enough (even with my basic soldering skills) to put a proper header on. The tricky bit is that the flash is connected via SPI, so it’s not just a matter of attaching JTAG, doing a scan and reflashing from the JTAG tool. I ended up doing RAM initialisation, then copying a RAM copy of u-boot in and then using that to reflash. There may well have been a better way, but this worked for me. For reference the failure mode I saw was an infinitely repeating:

ROM VER: 1.1.3

CFG 05
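If the serial console is being captured to a file, this failure mode is easy to spot mechanically. The sketch below counts how often the `ROM VER:` banner shows up in a captured log and treats three or more occurrences as a boot loop. The threshold and the function names are arbitrary choices for illustration, not anything taken from the router or its tools.

```python
def count_banner_repeats(log, banner="ROM VER:"):
    """Count the lines in a serial capture that start with the boot ROM banner."""
    return sum(1 for line in log.splitlines() if line.strip().startswith(banner))


def looks_like_boot_loop(log, threshold=3):
    """Guess at a boot loop: the banner appears at least `threshold` times."""
    return count_banner_repeats(log) >= threshold
```

A healthy boot prints the banner once and then carries on into u-boot, so a count that keeps climbing is the giveaway.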

My JTAG device is a Bus Pirate v3b which is much better than the parallel port JTAG device I built the first time I wanted to do something similar. I put the latest firmware (6.1) on it.

All of this was done from my laptop, which runs Debian testing (stretch). I used the OpenOCD 0.9.0-1+b1 package from there.

Daniel Schwierzeck has some OpenOCD scripts which include a target definition for the ARX100. I added a board definition for the DGN3500 (I’ve also sent Daniel a patch to add this to his repo).

I tied all of this together with an openocd.cfg that contained:

source [find interface/buspirate.cfg]

buspirate_port /dev/ttyUSB1

buspirate_vreg 0

buspirate_mode normal

buspirate_pullup 0

reset_config trst_only

source [find openocd-scripts/target/arx100.cfg]

source [find openocd-scripts/board/dgn3500.cfg]

gdb_flash_program enable

gdb_memory_map enable

gdb_breakpoint_override hard

I was then able to power on the router and type dgn3500_ramboot into the OpenOCD session. This fetched my RAM copy of u-boot from dgn3500_ram/u-boot.bin, copied it into the router’s memory and started it running. From there I had a u-boot environment with access to the flash commands and was able to restore the original Netgear image (and once I was sure that was working ok I subsequently upgraded to the Barrier Breaker OpenWRT image).

What Jonathan Did Next

While I mentioned last September that I had failed to be selected for an H-1B and had been having discussions at DebConf about alternative employment, I never got around to elaborating on what I’d ended up doing.

Short answer: I ended up becoming a law student, studying for a Masters in Legal Science at Queen’s University Belfast. I’ve just completed my first year of the 2 year course and have managed to do well enough in the 6 modules so far to convince myself it wasn’t a crazy choice.

Longer answer: After Vello went under in June I decided to take a couple of months before fully investigating what to do next, largely because I figured I’d either find something that wanted me to start ASAP or fail to find anything and stress about it. During this period a friend happened to mention to me that the applications for the Queen’s law course were still open. He happened to know that it was something I’d considered before a few times. Various discussions (some of them over gin, I’ll admit) ensued and I eventually decided to submit an application. This was towards the end of August, and I figured I’d also talk to people at DebConf to see if there was anything out there tech-wise that I could get excited about.

It turned out that I was feeling a bit jaded about the whole tech scene. Another friend is of the strong opinion that you should take a break at least every 10 years. Heeding her advice I decided to go ahead with the law course. I haven’t regretted it at all. My initial interest was largely driven by a belief that there are too few people who understand both tech and law. I started with interests around intellectual property and contract law as well as issues that arise from trying to legislate for the global nature of most tech these days. However the course is a complete UK qualifying degree (I can go on to do the professional qualification in NI or England & Wales) and the first year has been about public law. Which has been much more interesting than I was expecting (even, would you believe it, EU law). Especially given the potential changing constitutional landscape of the UK after the recent general election, with regard to talk of repeal of the Human Rights Act and a referendum on exit from the EU.

Next year will concentrate more on private law, and I’m hoping to be able to tie that in better to what initially drove me to pursue this path. I’m still not exactly sure which direction I’ll go once I complete the course, but whatever happens I want to keep a linkage between my skill sets. That could be either leaning towards the legal side but with the appreciation of tech, returning to tech but with the appreciation of the legal side of things or perhaps specialising further down an academic path that links both. I guess I’ll see what the next year brings. :)

I should really learn systemd

As I slowly upgrade all my machines to Debian 8.0 (jessie) they’re all ending up with systemd. That’s fine; my laptop has been running it since it went into testing whenever it was. Mostly I haven’t had to care, but I’m dimly aware that it has a lot of bits I should learn about to make best use of it.

Today I discovered systemctl is-system-running. I’m not sure why I’d use it, but when I ran it, it responded with degraded. That’s not right, thought I. How do I figure out what’s wrong? systemctl --state=failed turned out to be the answer.

# systemctl --state=failed

UNIT LOAD ACTIVE SUB DESCRIPTION

● systemd-modules-load.service loaded failed failed Load Kernel Modules

LOAD = Reflects whether the unit definition was properly loaded.

ACTIVE = The high-level unit activation state, i.e. generalization of SUB.

SUB = The low-level unit activation state, values depend on unit type.

1 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use 'systemctl list-unit-files'.
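For checking several machines it may be handy to pull just the unit names out of that listing. This is a rough sketch with hypothetical helper names: `parse_failed_units` works on the text shown above (header, bullet and legend included), while `failed_units` runs `systemctl` with the `--no-legend` and `--plain` options to cut down the noise first.

```python
import subprocess


def parse_failed_units(output):
    """Extract unit names whose ACTIVE column is 'failed' from systemctl output."""
    units = []
    for line in output.splitlines():
        # Drop the status bullet, then split into UNIT LOAD ACTIVE SUB DESCRIPTION.
        fields = line.replace("●", " ").split(None, 4)
        # Unit names always carry a type suffix (.service, .mount, ...),
        # which filters out the header and legend lines.
        if len(fields) >= 4 and "." in fields[0] and fields[2] == "failed":
            units.append(fields[0])
    return units


def failed_units():
    """Ask the running systemd for its failed units."""
    result = subprocess.run(
        ["systemctl", "--state=failed", "--no-legend", "--plain"],
        capture_output=True,
        text=True,
        check=False,
    )
    return parse_failed_units(result.stdout)
```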

Ok, so it’s failed to load some kernel modules. What’s it trying to load? systemctl status -l systemd-modules-load.service led me to /lib/systemd/systemd-modules-load which complained about various printer modules not being able to be loaded. Turned out this was because CUPS had dropped them into /etc/modules-load.d/cups-filters.conf on upgrade, and as I don’t have a parallel printer I hadn’t compiled up those modules. One of my other machines had also had an issue with starting up filesystem quotas (I think because there’d been some filesystems that hadn’t mounted properly on boot - my fault rather than systemd). Fixed that up and then systemctl is-system-running started returning a nice clean running.
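The modules check can be sketched in a few lines too. Files under /etc/modules-load.d/ list one module name per line, with blank lines and lines starting with `#` or `;` ignored, so the wanted modules are easy to extract and compare against whatever is actually available. The function names here are invented for the example, and how the list of available modules is gathered (for instance by walking /lib/modules) is left out.

```python
def parse_modules_load(conf_text):
    """Return the module names listed in a modules-load.d style file."""
    modules = []
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Blank lines and '#' or ';' comments are skipped.
        if not line or line.startswith(("#", ";")):
            continue
        modules.append(line)
    return modules


def _normalise(name):
    """Treat '-' and '_' as equivalent, as the kernel module tools do."""
    return name.replace("-", "_")


def missing_modules(wanted, available):
    """List the wanted modules that are not among the available ones."""
    have = {_normalise(name) for name in available}
    return [name for name in wanted if _normalise(name) not in have]
```

Anything `missing_modules` returns is a candidate for either building the module or removing the line from the conf file.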

Now this is probably something that was silently failing back under sysvinit, but of course nothing was tracking that other than some output on boot up. So I feel that I’ve learnt something minor about systemd that actually helped me clean up my system, and puts me in better stead for when something important fails.

Stepping down from SPI

I was first elected to the Software in the Public Interest board back in 2009. I was re-elected in 2012. This July I am up for re-election again. For a variety of reasons I’ve decided not to stand; mostly a combination of the fact that I think 2 terms (6 years) is enough in a single stretch and an inability to devote as much time to the organization as I’d like. I mentioned this at the May board meeting. I’m planning to stay involved where I can.

My main reason for posting this here is to cause people to think about whether they might want to stand for the board. Nominations open on July 1st and run until July 13th. The main thing you need to absolutely commit to is being able to attend the monthly board meeting, which is held on IRC at 20:30 UTC on the second Thursday of the month. They tend to last at most 30 minutes. Of course there’s a variety of tasks that happen in the background, such as answering queries from prospective associated projects or discussing ongoing matters on the membership or board lists depending on circumstances.

It’s my firm belief that SPI do some very important work for the Free software community. Few people realise the wide variety of associated projects. SPI offload the boring admin bits around accepting donations and managing project assets (be those machines, domains, trademarks or whatever), leaving those projects able to concentrate on the actual technical side of things. Most project members don’t realise the involvement of SPI, and that’s largely a good thing as it indicates the system is working. However it also means that there can sometimes be a lack of people wanting to stand at election time, and an absence of diversity amongst the candidates.

I’m happy to answer questions of anyone who might consider standing for the board; #spi on irc.oftc.net is a good place to ask them - I am there as Noodles.
